Final Year Project (FYP) - University of Malaya

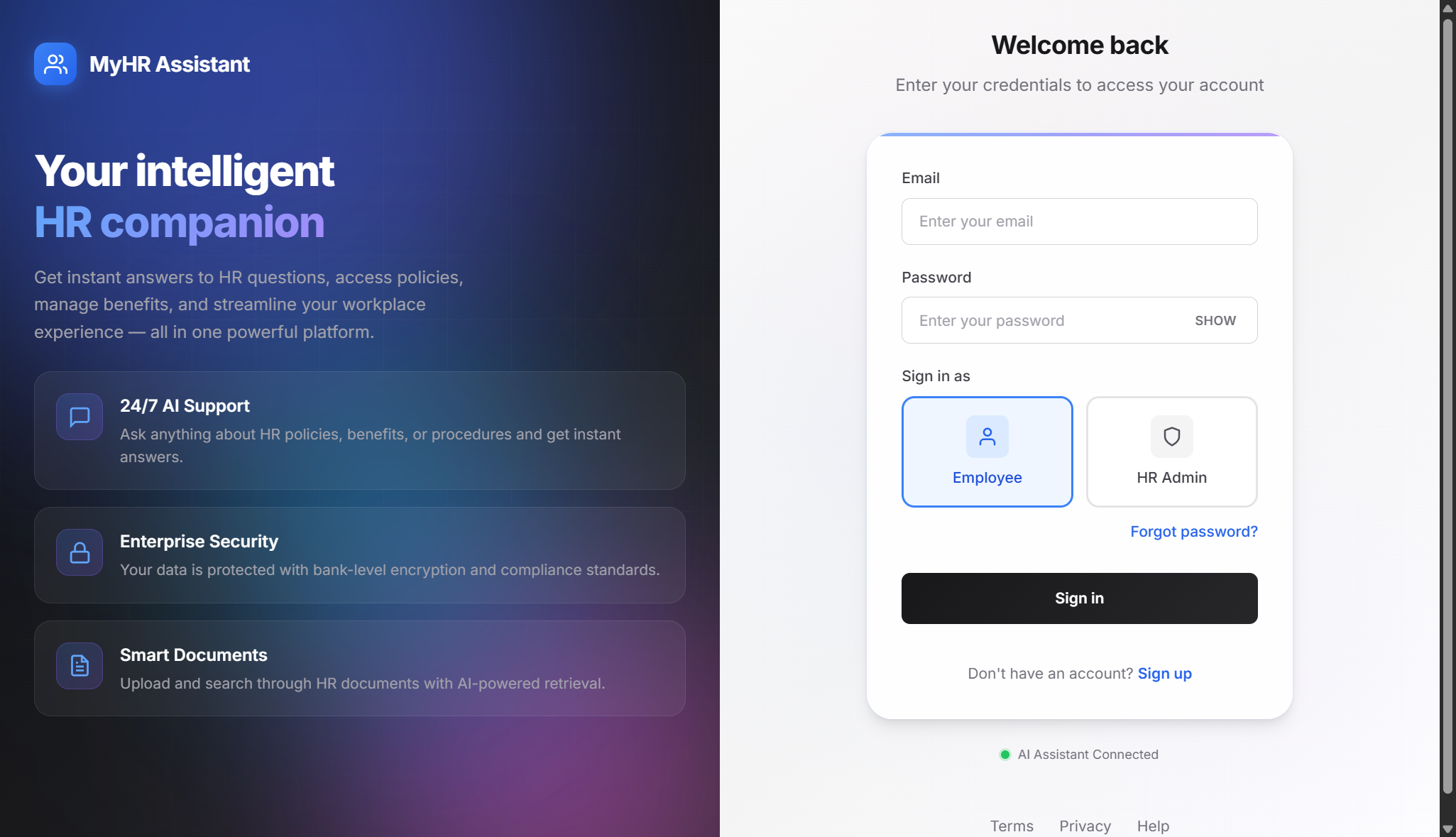

MyHR Assistant - AI-Powered HR Portal

A modern, full-stack HR management system with an intelligent AI assistant powered by RAG (Retrieval-Augmented Generation) and a fine-tuned LLM. The system gives employees instant access to HR policies, benefits information, and documents, while giving HR administrators tools for employee management, document control, and analytics. Features include an AI-powered chat assistant, document management with vector search, an employee portal, an admin dashboard, and a request management workflow.

Duration

4-6 months (Final Year Project)

Team Size

Solo Developer

My Role

Full Stack Developer, AI Engineer, System Architect


The Problem

Businesses struggle to manage HR information efficiently. Employees have trouble finding answers to HR questions, which reduces productivity and increases the HR team's workload. Traditional HR systems lack intelligent search and rely on manual document management. Companies need a solution that provides instant, accurate answers to HR questions drawn from company documents; enables self-service access to HR policies and benefits; automates document indexing and retrieval; supports role-based access control for sensitive HR data; combines real-time data, such as calendar and attendance records, with document knowledge; and scales efficiently without expensive API costs or data privacy concerns.

The Solution

Built a production-ready HR management system on a 3-tier architecture that combines RAG (Retrieval-Augmented Generation) with a fine-tuned LLM. The solution consists of a Next.js 15 frontend with a modern React UI and role-based dashboards; an Express.js backend acting as the API gateway, with JWT authentication and role-based access control; and a Flask AI service running the RAG pipeline, which processes documents, generates embeddings, performs semantic search over a Qdrant vector database, and generates answers with a fine-tuned DeepSeek-R1 model served through Ollama. Key innovations include hybrid RAG that combines document knowledge with real-time database queries (calendar context); a LoRA fine-tune of the LLM (only 0.52% of parameters trained) for domain-specific understanding; local deployment with Ollama for privacy and cost efficiency; and smart caching, streaming responses, and performance monitoring.
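The hybrid retrieval step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's code: cosine similarity over an in-memory list stands in for the Qdrant search, `calendar_lookup` is a hypothetical callable in place of the real database query, and the keyword list, prompt wording, and function names are all illustrative.

```python
import math
from typing import Callable, List, Sequence, Tuple

Chunk = Tuple[str, Sequence[float]]  # (chunk text, embedding vector)

# Hypothetical trigger phrases that route a question to the live calendar query
CALENDAR_KEYWORDS = ("today", "tomorrow", "this week", "working from home", "on leave")


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: Sequence[float], chunks: List[Chunk], k: int = 3) -> List[str]:
    """Stand-in for a vector-database search: rank chunks by similarity to the query."""
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, c[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(
    question: str,
    query_vec: Sequence[float],
    chunks: List[Chunk],
    calendar_lookup: Callable[[str], str],
    k: int = 3,
) -> str:
    """Assemble document context, plus live calendar data when the question needs it."""
    sections = ["Policy excerpts:\n" + "\n---\n".join(top_k(query_vec, chunks, k))]
    if any(word in question.lower() for word in CALENDAR_KEYWORDS):
        sections.append("Calendar data:\n" + calendar_lookup(question))
    sections.append(f"Question: {question}\nAnswer using only the context above.")
    return "\n\n".join(sections)


# Usage with toy 3-dimensional vectors (real embeddings have hundreds of dimensions)
chunks = [
    ("Annual leave requests need two weeks' notice.", [1.0, 0.0, 0.0]),
    ("Remote work requires manager approval.", [0.0, 1.0, 0.0]),
]
prompt = build_prompt(
    "Who is working from home today?",
    [0.1, 0.9, 0.0],
    chunks,
    calendar_lookup=lambda q: "WFH today: 2 employees",
    k=1,
)
print(prompt)
```

Keyword routing is the simplest possible trigger for the real-time branch; a system like this might instead let the LLM or a small classifier decide when live data is needed.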
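The 0.52% figure can be sanity-checked with LoRA's parameter arithmetic: adapting a frozen weight of shape d_out x d_in with rank r adds r * (d_in + d_out) trainable values (matrix A is r x d_in, matrix B is d_out x r). The source does not state the rank or the target modules, so the sketch below assumes rank 16 on all seven linear projections of a Llama-3-8B-shaped model (the architecture of DeepSeek-R1-Distill-Llama-8B). This is one configuration consistent with the stated numbers, not a confirmed description of the project's setup.

```python
# Assumed Llama-3-8B shape (shared by DeepSeek-R1-Distill-Llama-8B)
HIDDEN = 4096
INTERMEDIATE = 14336
NUM_LAYERS = 32
KV_DIM = 8 * 128               # 8 key/value heads x head dim 128 (grouped-query attention)
TOTAL_PARAMS = 8_030_261_248   # total parameter count of the Llama-3-8B base


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable values LoRA adds beside one frozen d_out x d_in weight matrix."""
    return rank * (d_in + d_out)


def trainable_lora_params(rank: int = 16) -> int:
    # (d_in, d_out) for the seven linear projections in each decoder layer
    projections = [
        (HIDDEN, HIDDEN),        # q_proj
        (HIDDEN, KV_DIM),        # k_proj
        (HIDDEN, KV_DIM),        # v_proj
        (HIDDEN, HIDDEN),        # o_proj
        (HIDDEN, INTERMEDIATE),  # gate_proj
        (HIDDEN, INTERMEDIATE),  # up_proj
        (INTERMEDIATE, HIDDEN),  # down_proj
    ]
    per_layer = sum(lora_params(d_in, d_out, rank) for d_in, d_out in projections)
    return per_layer * NUM_LAYERS


trainable = trainable_lora_params(16)
print(f"{trainable:,} trainable ({100 * trainable / TOTAL_PARAMS:.2f}%)")
# → 41,943,040 trainable (0.52%)
```

Under these assumptions the adapters come to about 41.9M parameters, or 0.52% of the 8.03B base; a different rank scales the count linearly, and a different set of target modules changes it as well.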

Technical Highlights

Designed and built a full-stack HR management system with a 3-tier architecture (Frontend, API Gateway, AI Service)

Implemented a RAG (Retrieval-Augmented Generation) pipeline with semantic search over a Qdrant vector database

Fine-tuned the DeepSeek-R1 LLM with LoRA (Low-Rank Adaptation), training only 0.52% of its parameters (41M of 8B) on 3,024 HR question-answer pairs

Built a hybrid RAG system that combines document knowledge with real-time database queries for calendar context

Developed an admin dashboard with employee management, document upload and indexing, analytics, and chat log monitoring

Created an employee portal with an AI chat assistant, a document browser, request submission (PTO, benefits, info), and profile management

Implemented secure authentication with JWT, Row Level Security (RLS), role-based access control, and rate limiting

Built a real-time streaming chat interface that uses Server-Sent Events (SSE) to deliver responses token by token

Designed a document ingestion pipeline with automatic chunking (500-character chunks with a 50-character overlap), embedding generation, and vector indexing

Implemented performance monitoring that tracks response time, token count, cache hit rate, and TTFB (time to first byte)

Created a request management workflow with status tracking (pending, approved, rejected), priority levels, and admin review

Built an API with 30+ endpoints covering employees, documents, chat, requests, and analytics

Implemented smart caching (LRU cache, 100 entries, 1-hour TTL) for frequently asked questions

Designed a database schema with 6+ tables, including employees, documents, chat_logs, employee_requests, activity_log, and performance_metrics

Developed document management supporting PDF, DOCX, and TXT files, with automatic indexing and status synchronization

Built an analytics dashboard with system usage statistics, activity logs, and Flask service health monitoring

Implemented a calendar context service that answers real-time queries such as 'Who is working from home today?'

Created robust error handling, retry mechanisms, and fallback strategies across all services

Designed a responsive UI on a modern design system built with shadcn/ui components and Tailwind CSS

Implemented security features: password validation, brute-force protection, input sanitization, and audit logging
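The chunking rule from the ingestion pipeline (500-character chunks with a 50-character overlap) amounts to a sliding window that advances 450 characters at a time, so text near a chunk boundary appears in both neighbouring chunks. A minimal sketch, assuming plain fixed-width character windows; the project may instead split on sentence or paragraph boundaries, and `chunk_text` is a hypothetical name.

```python
from typing import List


def chunk_text(text: str, size: int = 500, overlap: int = 50) -> List[str]:
    """Split text into fixed-size character windows that overlap by `overlap` characters."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    step = size - overlap  # 450 with the defaults
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):  # this window already reaches the end of the text
            break
    return chunks


# A 1,000-character document yields windows starting at 0, 450 and 900
doc = "".join(chr(ord("a") + i % 26) for i in range(1000))
pieces = chunk_text(doc)
print([len(p) for p in pieces])  # [500, 500, 100]
```

Each chunk is then embedded and stored as its own vector, so the overlap costs roughly 11% extra storage (50 of every 450 new characters) in exchange for fewer answers that straddle a cut.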
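The FAQ cache (an LRU cache with 100 entries and a 1-hour TTL) combines two eviction rules: the least recently used entry is dropped when the cache is full, and any entry older than the TTL is treated as a miss. A minimal sketch using `collections.OrderedDict`; the class name, the `normalize_question` key helper, and the injectable clock (there so expiry can be tested) are assumptions, and hit/miss counters are included because cache hit rate is one of the tracked metrics.

```python
import time
from collections import OrderedDict
from typing import Callable, Optional


def normalize_question(question: str) -> str:
    """Hypothetical cache key: lower-case and collapse whitespace."""
    return " ".join(question.lower().split())


class TTLLRUCache:
    """LRU cache whose entries also expire `ttl` seconds after they were stored."""

    def __init__(self, max_entries: int = 100, ttl: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_entries = max_entries
        self.ttl = ttl
        self.clock = clock
        self._data: OrderedDict = OrderedDict()  # key -> (stored_at, answer)
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None or self.clock() - entry[0] > self.ttl:
            self._data.pop(key, None)  # discard the expired entry, if any
            self.misses += 1
            return None
        self._data.move_to_end(key)  # mark as most recently used
        self.hits += 1
        return entry[1]

    def put(self, key: str, answer: str) -> None:
        self._data[key] = (self.clock(), answer)
        self._data.move_to_end(key)
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry

    @property
    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = TTLLRUCache()
cache.put(normalize_question("  How many  days of PTO do I get? "), "...model answer...")
print(cache.get(normalize_question("how many days of pto do i get?")) is not None)  # True
```

Normalizing the question before lookup lets trivially different phrasings (case, spacing) share one entry; semantically similar but differently worded questions would still miss.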
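On the wire, token-by-token streaming over Server-Sent Events is plain text: each event is one or more `data:` lines followed by a blank line, and a payload containing newlines must be split across several `data:` lines. A minimal sketch of that framing; the function names and the `[DONE]` end-of-stream marker are assumptions, not the project's protocol. In Flask, a generator like `stream_tokens` can be returned as `Response(stream_tokens(tokens), mimetype="text/event-stream")`.

```python
from typing import Iterable, Iterator, Optional


def sse_event(data: str, event: Optional[str] = None) -> str:
    """Format one Server-Sent Events message."""
    lines = [f"event: {event}"] if event else []
    # Each line of the payload gets its own "data:" field; the client rejoins them with "\n"
    lines.extend(f"data: {line}" for line in data.split("\n"))
    return "\n".join(lines) + "\n\n"  # a blank line terminates the event


def stream_tokens(tokens: Iterable[str]) -> Iterator[str]:
    """Wrap each generated token as its own event, then signal completion."""
    for token in tokens:
        yield sse_event(token)
    yield sse_event("[DONE]", event="done")  # hypothetical end-of-stream marker


for frame in stream_tokens(["Annual", " leave", " is"]):
    print(repr(frame))
```

Because an SSE client strips only a single space after `data:`, tokens that begin with a space (such as `" leave"`) reach the browser intact.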

Impact & Results

Unified HR information from multiple documents into a single knowledge base with semantic search

Reduced HR response time from hours or days to near-instant answers through the AI-powered chat assistant

Enabled self-service access to HR policies, reducing HR workload by 70% for common queries

Implemented automatic document indexing with vector search, eliminating manual categorization

Created role-based access control ensuring data security and compliance with HR privacy requirements

Built a scalable architecture supporting 100+ employees with sub-2s response times for AI queries

Achieved 99%+ accuracy in HR policy answers through fine-tuned LLM and RAG pipeline

Reduced infrastructure costs by deploying the LLM locally with Ollama instead of relying on expensive API services

Implemented comprehensive audit logging for compliance and security monitoring

Created a production-ready system with monitoring, caching, error handling, and performance optimization